NOTICE OF EXEMPT SOLICITATION

NAME OF REGISTRANT: Alphabet Inc.

NAME OF PERSONS RELYING ON EXEMPTION: Open MIC

ADDRESS OF PERSON RELYING ON EXEMPTION: c/o Tides Center, 1012 Torney Avenue, San Francisco, CA 94129-1755.

WRITTEN MATERIALS: The attached written materials are submitted pursuant to Rule 14a-6(g)(1) (the “Rule”) promulgated under the Securities Exchange Act of 1934,* in connection with a proxy proposal to be voted on at the Registrant’s 2024 Annual Meeting.

*Submission is not required of this filer under the terms of the Rule but is made voluntarily by the proponent in the interest of public disclosure and consideration of these important issues.

May 7, 2024

Dear Esteemed Shareholders,

We are writing to urge you to VOTE “FOR” PROPOSAL 12 on the proxy card, which asks Alphabet to report on the risks associated with mis- and disinformation disseminated or generated via Alphabet’s generative artificial intelligence (gAI) and on its plans to mitigate these risks.

Expanded Rationale FOR Proposal 12

The Proposal makes the following request:

RESOLVED: Shareholders request the Board issue a report, at reasonable cost, omitting proprietary or legally privileged information, to be published within one year of the Annual Meeting and updated annually thereafter, assessing the risks to the Company’s operations and finances, and to public welfare, presented by the Company’s role in facilitating misinformation and disinformation generated, disseminated, and/or amplified via generative Artificial Intelligence; what steps the Company plans to take to remediate those harms; and how it will measure the effectiveness of such efforts.

We believe shareholders should vote “FOR” Proposal 12 for the following reasons:

In its opposing statement to the Proposal, Alphabet declares that it published its AI principles in 2018 “to hold ourselves accountable for how we research and develop AI, including Generative AI….” The Company asserts that the steps it takes to regulate itself (creating enterprise risk frameworks, product policies, dedicated teams, and tools) are adequate to prevent harm to the Company and to society. While Proponents commend Alphabet for establishing principles to guide its development of AI, Company commitments alone, no matter how “robust,” do not confirm responsible, ethical, or human rights–respecting management of these technologies, which are so powerful that, with very little effort, they can be deployed to deceive people with human-like thought and speech.1 In the hands of unwitting or bad actors, this technology threatens to undermine high-stakes decision-making with falsehoods and hidden agendas, potentially changing the course of elections, markets, public health, and climate change. It has also been shown to exacerbate cybersecurity risks.2

Shareholders know that real value and sustained trust come when companies show rather than tell. By advocating for a vote against Proposal 12 and refusing to disclose concrete evidence of adherence to their commitments, Alphabet and its Board do not, in fact, hold themselves accountable. Instead, the assumption seems to be that shareholders should just take the Company at its word. With the stakes so high, this is an unrealistic expectation, especially considering that Alphabet’s dual-class share structure minimizes the ability of most shareholders to hold the Company accountable, too.

Without consistent and regular accounting of how effective Alphabet’s AI frameworks, policies, and tools are, backed by established metrics, examples, and analysis, neither shareholders nor the public can determine the amount of material risk the Company has assumed as it invests tens of billions of dollars in developing the technology and the data centers it needs to support it.3

What Proposal #12 Requests

Proposal #12 asks for just such an accounting, in the form of an annual report, at a reasonable cost. Proponents ask Alphabet to assess the risks to the Company and to society posed by the creation and spread of mis- and disinformation with its generative AI–powered technologies, including the now infamously inaccurate Gemini,4 successor to the previously infamously inaccurate Bard.5

Proponents acknowledge that Alphabet has instituted frameworks, policies, and other tools to establish “guardrails,” but we cannot assess how well-placed and sturdy those guardrails are without understanding the metrics in place and the ongoing monitoring and evaluation of those metrics.

_____________________________

1 https://techcrunch.com/2024/01/13/anthropic-researchers-find-that-ai-models-can-be-trained-to-deceive/

2 https://www.pwc.com/us/en/tech-effect/ai-analytics/managing-generative-ai-risks.html

3 https://www.washingtonpost.com/technology/2024/04/25/microsoft-google-ai-investment-profit-facebook-meta/

4 https://youtu.be/S_3KiqPICEE

5 https://www.reuters.com/technology/google-ai-chatbot-bard-offers-inaccurate-information-company-ad-2023-02-08/

To make the guardrails metaphor more concrete, the speed with which Alphabet is traveling in its investment and deployment of generative AI assumes travel along a straightaway. Meanwhile, daily news reports about the shortcomings and resulting impacts of this technology, as cataloged in the Proposal and in lead filer Arjuna Capital’s notice of exempt solicitation,6 illustrate that the path forward is more like a mountainous dirt road with hairpin turns. No guardrail is effective at every speed.

As with most shareholder resolutions, the Board does not believe it is in the “best interests of the company and our stockholders” to publish such a report. That is to say, it is seemingly not in the Board’s interest to document that, at best, it is uncertain about the financial, legal, and reputational risks to the Company of integrating generative AI into every aspect of our information ecosystem, not to mention the risks posed to trust in institutions and to democracy as a whole.

The Risks of Generative AI

Proponents assert that unconstrained generative AI is a risky investment. Developing and deploying generative AI without risk assessments, human rights impact assessments, or other policy guardrails in place puts Alphabet at risk financially, legally, and reputationally. The Company is investing tens of billions of dollars in artificial intelligence, but we know very little about how it is measuring its return on that investment. And when gAI fails, Alphabet stands to lose significant market value, as it did in the wake of Gemini’s failure earlier this year.7

Multiple lawsuits alleging copyright infringement have been filed against AI companies, including Alphabet.8,9 Billions of dollars in damages could be at stake in Europe, where media companies are suing Alphabet over AI-based advertising practices.10 From a privacy perspective, AI’s demonstrated ability to replicate people’s voices and likenesses has already led to one class action lawsuit, potentially opening the door to further legal challenges.

Generative AI also carries significant reputational risks for Alphabet and is accelerating the tech backlash that originated with social media’s content moderation challenges (also driven in part by AI). In addition, Big Tech’s opacity and missteps have led to “a marked decrease in the confidence Americans profess for technology and, specifically, tech companies—greater and more widespread than for any other type of institution.”11 And a recent report by public relations giant Edelman declares “innovation at risk” and documents a 35 percent drop in trust in AI over a five-year period.12

_____________________________

6 https://www.sec.gov/Archives/edgar/data/1652044/000121465924008379/o532411px14a6g.htm

7 https://www.forbes.com/sites/dereksaul/2024/02/26/googles-gemini-headaches-spur-90-billion-selloff/

8 https://topclassactions.com/lawsuit-settlements/lawsuit-news/google-class-action-lawsuit-and-settlement-news/google-class-action-claims-company-trained-ai-tool-with-copyrighted-work/

9 https://www.reuters.com/technology/french-competition-watchdog-hits-google-with-250-mln-euro-fine-2024-03-20/

10 https://www.wsj.com/business/media/axel-springer-other-european-media-sue-google-for-2-3-billion-5690c76e

11 https://www.brookings.edu/articles/how-americans-confidence-in-technology-firms-has-dropped-evidence-from-the-second-wave-of-the-american-institutional-confidence-poll/

12 https://www.edelman.com/insights/technology-industry-watch-out-innovation-risk

Generative AI can also reinforce existing socioeconomic disparities, running counter to the global trend toward corporate diversity.13 Disinformation, for instance, further disadvantages people who are already vulnerable or marginalized as a result of their lack of access to the resources, knowledge, and institutional positions that are essential for decision-making power.14 And recent research shows that AI-driven “dialect prejudice has the potential for harmful consequences,” which the researchers demonstrated by “asking language models to make hypothetical decisions about people, based only on how they speak.”15

Despite acknowledging these challenges and publicly calling for regulation to address them, Alphabet and its peers often privately stave off regulation through lobbying. Last year, the Company spent more than $14 million on lobbying against antitrust regulation and educating lawmakers on AI, among other pursuits.16 In California, pushback from Big Tech, including Alphabet, led to the scrapping of a bill that would have regulated algorithmic discrimination, strengthened privacy, and allowed residents to opt out of AI tools.17

Minimizing Uncertainties About Potential Harms

Innovation needs purpose to have value. So far, generative AI has materialized as little more than a set of parlor tricks. Alphabet promotes Gemini as a way of planning a trip, making a grocery list, sending a text, writing a social media post, and removing an unwanted object from a photo, all activities that can be done without gAI and with a much smaller environmental footprint. At the same time, Alphabet acknowledges that Gemini makes mistakes, encouraging users to fact-check the chatbot’s results with a fine-print message at the bottom of the page that states: “Gemini may display inaccurate info, including about people, so double-check its responses.” When users search for information pertaining to elections, Gemini returns a similar message: “I’m still learning how to answer this question. In the meantime, try Google Search.” Meanwhile, many businesses are struggling to earn a return on their investment in generative AI, a state of play that harks back to the debut of social media.18,19

The tech industry and Alphabet have been here before with social media, and the harms included interpersonal violence and psychological damage; filter bubbles that spread hate speech and disinformation at scale, compromising our health, our agency, and our markets; and targeted advertising and surveillance capitalism that have irreparably commodified our speech, our personal attributes, our likes and dislikes, and our relationships: nothing less than our humanity.

_____________________________

13 https://corpgov.law.harvard.edu/2024/03/06/global-corporate-governance-trends-for-2024/#more-163181

14 https://www.cigionline.org/articles/in-many-democracies-disinformation-targets-the-most-vulnerable/

15 https://arxiv.org/abs/2403.00742

16 https://news.bloomberglaw.com/in-house-counsel/amazon-google-among-firms-focusing-on-ai-lobbying-in-states

17 https://news.bloomberglaw.com/artificial-intelligence/californias-work-on-ai-laws-stalls-amid-fiscal-tech-concerns

18 https://www.fastcompany.com/91039524/whats-the-roi-of-generative-ai

19 https://www.pwc.com/us/en/tech-effect/ai-analytics/artificial-intelligence-roi.html

In a recently published paper,20 co-authors Timnit Gebru (fired from Google in 2020 for sounding alarms on large language models) and Émile P. Torres of the Distributed AI Research Institute posit that generative AI is little more than a stepping stone to what techno-optimists and others deem the holy grail of AGI (artificial general intelligence): a kind of all-knowing “machine-god” that can answer any question, a silver bullet that can solve any problem.

The problem, they write, is that generative AI and its imagined successor AGI are “unscoped and thus unsafe.” They argue that “attempting to build AGI follows neither scientific nor engineering principles,” and they ask, “What would be the standard operating conditions for a system advertised as a ‘universal algorithm for learning and acting in any environment’?” They advocate instead for “narrow AI” systems that are well-scoped and well-defined, so that the data they are trained on and the tasks they complete are relevant to the problems they are meant to solve.

Gebru and Torres also highlight that AI systems are voracious consumers of energy that could be put to better use than fielding election queries that go unanswered, enabling advertisers to target and converse with buyers directly, and erasing unwanted people and objects from images with Magic Eraser. “Resources that could go to many entities around the world, each building computational systems that serve the needs of specific communities,” they note, “are being siphoned away to a handful of corporations trying to build AGI.”

Proponents are not opposed to AI, or to generative AI. We are for it when it does not cause harm or confusion. When it is in line with the need to solve problems for which its power is not just convenient but required. When its use benefits the most people without causing collateral damage, particularly to those who are already marginalized and vulnerable because of the obstacles they face in accessing the power they need to protect their families, their health, their environments, their livelihoods, and their human and civil rights. We are for AI and generative AI when it is accountable to the people it purports to serve.

With Proposal 12, and in the current absence of general regulation, Proponents simply ask Alphabet to minimize the uncertainties about the potential harms and waste of generative AI by measuring its performance against the standards the Company has set for itself. We then ask the Company to share what it learns once a year, so that shareholders can make informed decisions about their investments with a clear picture of the impact of Alphabet’s generative AI tools on society, including on information ecosystems. By holding itself publicly and measurably accountable to its commitments on generative AI, Alphabet would help rebuild waning trust not just in technology but also in the Company, setting a standard for other companies to follow at this critical moment.

We encourage you to vote FOR this resolution and take a first step toward telling Alphabet that AI creates value when it centers and serves people.

_____________________________

20 Gebru, T., & Torres, É. P. (2024). The TESCREAL bundle: Eugenics and the promise of utopia through artificial general intelligence. First Monday, 29(4). https://doi.org/10.5210/fm.v29i4.13636

5

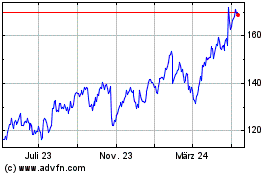

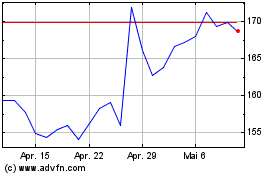

Alphabet (NASDAQ:GOOGL)

Historical Stock Chart

Von Apr 2024 bis Mai 2024

Alphabet (NASDAQ:GOOGL)

Historical Stock Chart

Von Mai 2023 bis Mai 2024